The need for a holistic approach

Establishing a data lakehouse is not a value proposition on its own. The analytical processes and applications it supports determine its actual value to the organization. It is therefore crucial to keep the use cases and business processes to be optimized in mind from the start of the build-out.

Data need to be organized in fit-for-purpose data structures to balance cost and performance. Refresh cycles and real-time or right-time requirements determine the approach to ingestion, while the way analytical and AI-based results are delivered to people and to other applications drives the approach to integration.

Only a holistic approach, combined with a technology platform that provides the required flexibility and integrates the data lakehouse with the AI/analytics-based processes and applications, can deliver the speed and agility needed to minimize time to value.

Creating a powerful data lakehouse with mcube™

With our end-to-end AI platform, mcube™, organizations can create robust data lakehouses that streamline data management by integrating diverse data processing and analytics needs into one architecture. This approach helps avoid redundancies and inconsistencies in data, accelerates analysis throughput, and minimizes costs.

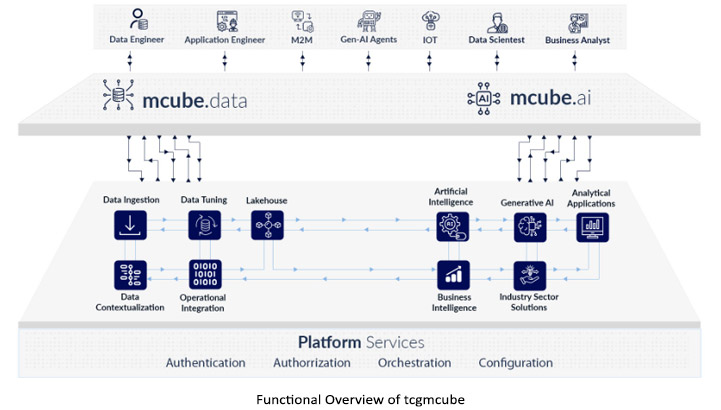

mcube™ comes with mcube.data and mcube.ai, providing data management as well as advanced analytics and AI capabilities on a single platform, managed by common platform services. This makes it a powerful platform for implementing the lakehouse and for deploying analytical and AI applications on top of it.

The holistic impact of mcube™

As an end-to-end data and AI/GenAI platform, mcube™ is designed to meet the ever-changing needs of organizations that are embarking on their digital transformation. The functional components within mcube.data and mcube.ai cover the breadth of capabilities needed for accelerated deployment cycles of traditional AI and generative AI-driven applications and business processes. The underlying platform services allow for enterprise-class management, monitoring, and compliance.

The data lakehouse solution powered by mcube™ gives users the tools necessary for efficient data accessibility, collaboration, and integrity. It offers a technology platform that allows for the required flexibility and an integrated approach between the data lakehouse and the AI/analytics-based processes and applications. The resulting agility maximizes velocity to value for the business.