- January 10, 2025

A data lakehouse is an open data management architecture that combines the flexibility, cost-efficiency, and scale of data lakes with the data management capabilities of data warehouses. It enables dashboarding, traditional AI, generative AI and AI-based applications on accessible and transparent data. By bridging the gap between data lakes and data warehouses, the data lakehouse architecture provides users with the tools necessary for efficient data accessibility, collaboration, and integrity. As the various user communities continue to generate vast amounts of data, the adoption of data lakehouses will likely play a pivotal role in advancing innovation.

The need for a holistic approach

Establishing a data lakehouse is not a value proposition on its own. The analytical processes and applications it supports determine its actual value to the organization. It is therefore crucial, when starting to build out a data lakehouse, to keep in mind the use cases and business processes that need to be optimized.

Data need to be organized in fit-for-purpose data structures to balance cost and performance. Refresh cycles and real-time or right-time requirements determine the approach to ingestion, while the way analytical and AI-based results are delivered to people and other applications drives the approach to integration.

Only a holistic approach, supported by a technology platform that offers the required flexibility and integrates the data lakehouse with the AI/analytics-based processes and applications, can provide the speed and agility to minimize time to value.

Creating a powerful data lakehouse with tcgmcube

Using our end-to-end AI platform, tcgmcube, organizations can create robust data lakehouses that streamline data management by integrating various data processing and analytics needs into one architecture. This approach helps avoid redundancies and inconsistencies in data, accelerates analysis throughput, and minimizes costs.

tcgmcube comes with mcube.data and mcube.ai, providing data management together with advanced analytics and AI capabilities on one platform, managed by common platform services. This makes it a powerful platform for implementing the lakehouse and deploying analytical and AI applications on top of it.

mcube.data

mcube.data is designed to handle the complexity of today’s data landscape, covering structured, semi-structured, and unstructured data in real-time, near real-time, and batch environments. It comprises data ingestion, data storage, and data management features and comes with a semantic layer powered by knowledge graphs.

- The Data Ingestion Layer of tcgmcube is highly interoperable and comes with pre-built standard connectors to various systems and instruments, such as balances, DNA sequencers, gas chromatographs, pH meters, thermocyclers, and titrators. It supports real-time as well as batch data ingestion and provides features for data transformation at various stages.

- For real-time AI (e.g., manufacturing 4.0 scenarios), the lakehouse supports data collection and data management at the edge before data is transferred to the central lakehouse. This process handles network interruptions and other unforeseen events through data caches and synchronization capabilities. The built-in connectors of tcgmcube support both the OPC UA and OPC DA protocols, enabling the ingestion of near real-time tag data.

- The versatile data storage layer of tcgmcube comes with robust data management features. It leverages ontology management and knowledge modeling capabilities, making it “easy to get data out”, and has the following layers:

  - Base data layer for source data processing, providing features to validate and catalogue the raw data

  - Analytic persistence layer with processed datasets for optimizing analytical queries and AI-driven processes

  - Semantic persistence layer with contextualized data taxonomy through knowledge graphs
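The edge collection described in the list above (caching data through network interruptions, then synchronizing with the central lakehouse) follows a general store-and-forward pattern. The sketch below illustrates that pattern only; it is not tcgmcube code, and `EdgeBuffer`, `collect`, `sync`, and the drop-oldest policy are hypothetical names and choices made for this example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EdgeBuffer:
    """Hypothetical store-and-forward cache for readings collected at the edge."""

    max_size: int = 10_000
    pending: deque = field(default_factory=deque, init=False)

    def collect(self, reading: dict) -> None:
        # Assumed policy: when the cache is full, drop the oldest reading.
        if len(self.pending) >= self.max_size:
            self.pending.popleft()
        self.pending.append(reading)

    def sync(self, send: Callable[[dict], None]) -> int:
        """Forward cached readings in arrival order; return how many were sent."""
        sent = 0
        while self.pending:
            try:
                send(self.pending[0])
            except ConnectionError:
                # Link is down: keep the reading and retry on the next sync.
                break
            # Remove a reading only after it was delivered successfully.
            self.pending.popleft()
            sent += 1
        return sent
```

Here `send` stands in for whatever transport delivers a reading to the central lakehouse. Because a reading leaves the cache only after `send` returns without error, an interrupted synchronization can simply be repeated later.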

mcube.ai

mcube.ai comprises advanced multi-modal AI integrating deep learning, traditional AI, computer vision, and private LLMs enhanced with knowledge graphs. It has a repository of more than 1,000 AI algorithms and supports real-time AI and comprehensive model management. The analysis layer is powered by the semantic layer, which provides deep ontologies for domain contextualization and so makes it “easy to get data out” for analysis. This block provides:

- Traditional AI at scale with a wide assortment of statistical, machine learning, deep learning, and optimization algorithms.

- Comprehensive generative AI algorithms covering traditional LLMs (private and public LLMs for text data), multimodal LLMs (to include image data), and retrieval-augmented generation (RAG) models for fast information retrieval and complete source traceability.

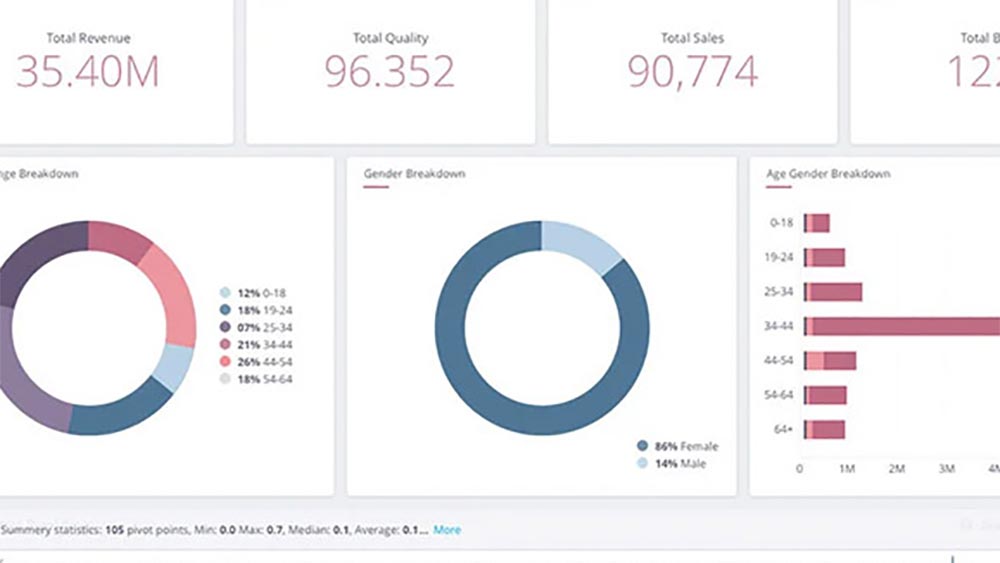

- Insights dissemination layer providing multiple user interfaces such as dashboards with easy business user self-service, operational reports, and low-code “upgrade safe custom screen painting”. The analytical applications and dashboards leverage the semantic layer for data interpretation and reporting.

- Action dissemination layer providing inputs to automated operational processes such as alerts, recommendations, action triggers, etc.
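The source traceability mentioned for RAG models in the list above comes from keeping a source identifier attached to every retrieved passage. The sketch below shows that idea in a generic form. It is not mcube.ai code: `Passage`, `retrieve`, and `build_prompt` are hypothetical names, and simple word overlap stands in for the vector or semantic search a real system would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # document identifier, kept for traceability
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase words with surrounding punctuation stripped.
    return {word.strip(".,;:!?").lower() for word in text.split()}


def retrieve(question: str, passages: list[Passage], k: int = 2) -> list[Passage]:
    """Rank passages by word overlap with the question (stand-in for vector search)."""
    query = tokenize(question)
    scored = [(len(query & tokenize(p.text)), p) for p in passages]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Keep at most k passages, and only those sharing a word with the question.
    return [p for score, p in ranked[:k] if score > 0]


def build_prompt(question: str, hits: list[Passage]) -> str:
    """Assemble an LLM prompt in which every context line carries its source ID."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in hits)
    return (
        "Answer using only the context below and cite the source IDs.\n"
        f"{context}\n"
        f"Question: {question}"
    )
```

Because each context line is prefixed with its source ID, an answer generated from this prompt can cite the documents it relied on, and a reviewer can trace each statement back to them.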

The Platform Services and Governance layer of tcgmcube helps implement enterprise-class governance practices to ensure data quality, security, and compliance.

Using the core components of the platform, organizations can create a data lakehouse that brings in data from diverse organizational sources and provides users with powerful data interrogation and analysis capabilities. It combines the power of GenAI with ontology-driven semantic search to enable users to have a seamless conversation with their data.

The holistic impact of tcgmcube

As an end-to-end data and AI/GenAI platform, tcgmcube is designed to meet the ever-changing needs of organizations embarking on their digital transformation journey. The functional components within mcube.data and mcube.ai cover the breadth of capabilities needed for accelerated deployment cycles of traditional AI and generative AI-driven applications and business processes. The underlying platform services allow for enterprise-class management, monitoring, and compliance.

The data lakehouse solution powered by tcgmcube provides users with the tools necessary for efficient data accessibility, collaboration, and integrity. It provides a technology platform that allows for the required flexibility and an integrated approach between the data lakehouse and the AI/analytics-based processes and applications. This approach provides the agility needed to maximize velocity to value for the business.